About

I am a passionate Data Engineer with practical experience in building scalable data pipelines, cloud data platforms, and big data processing. My expertise includes Python, SQL, Apache Spark, ETL, and cloud solutions across AWS, Azure, and GCP. I'm eager to work on challenging data problems and transform raw data into meaningful insights.

Data Engineer

Strong experience in building data pipelines, cloud architecture and data analytics platforms.

- LinkedIn: linkedin.com/in/akhil-bonthinayanivari-b66319234

- Phone: +1 470-940-8044

- Email: akhilme008@gmail.com

- Degree: Master in Data Engineering

- City: Dallas, Texas, USA

- Opportunities: Available

Skills

I specialize in building data pipelines, big data processing, cloud-based data solutions, and machine learning workflows. My expertise spans multiple cloud platforms and data engineering frameworks.

Resume

I am a Full-Stack Data Engineer with hands-on experience in building scalable data pipelines, full-stack applications, cloud platforms, and big data processing systems. Skilled in Python, SQL, Django, Apache Spark, and cloud platforms including AWS, Azure, and GCP.

Professional Summary

Akhil Bonthinayanivari

Versatile Full-Stack Data Engineer with hands-on experience in designing scalable data pipelines, building full-stack web applications, and deploying cloud-native solutions. Proficient in SQL, Python, Django, and big data technologies like Apache Spark and Airflow. Experienced with cloud platforms including AWS, Azure, and GCP. Strong in both frontend and backend development, with a focus on clean architecture, automation, and high-performance data processing. Proven track record across industries, including internships at Accenture, Cognizant, and DriverAI.

- Dallas, Texas, USA

- +1 470-940-8044

- akhilme008@gmail.com

Education

Master in Data Engineering

University of North Texas, Denton, TX

Bachelor in Computer Science

Madanapalle Institute of Technology and Science

Diploma in Computer Science

SV Government Polytechnic

Professional Experience

AI/ML Engineer

Jun 2025 - Present

DriverAI

- Developing AI-powered data products combining computer vision and real-time inference using PyTorch and TensorFlow.

- Built and deployed web-based dashboards for model performance tracking using Django and TailwindCSS.

Data Engineer

Jan 2024 - Feb 2025

Cognizant

- Developed distributed data pipelines using PySpark and AWS Glue for transforming large-scale datasets.

- Orchestrated workflows with Apache Airflow and optimized ETL jobs feeding into Redshift and Snowflake data warehouses.

- Implemented CI/CD for data pipeline deployments using Docker and GitHub Actions.

Full-Stack Data Engineer

Jun 2021 - Jun 2023

Accenture

- Built scalable ETL pipelines and integrated backend services with frontend dashboards for analytics.

- Created REST APIs with Django and Flask to expose processed data to internal stakeholders.

- Designed data models and implemented data validation across PostgreSQL and NoSQL databases.

Full-Stack Developer Intern

Jun 2019 - Jun 2020

Cognizant

- Developed internal tools using Django for CRUD operations and data visualizations.

- Built responsive frontend components using HTML, CSS, and JavaScript, and integrated them with REST APIs.

- Gained experience in full-stack application lifecycle, including testing and deployment.

Skills

- Python, SQL, Django, Flask, HTML, CSS, JavaScript, TailwindCSS

- Apache Spark, PySpark, Airflow, dbt, ETL/ELT pipelines

- AWS (S3, Redshift, Lambda, EC2), Azure Data Factory, GCP (BigQuery)

- PostgreSQL, Cassandra, MongoDB, Snowflake

- Docker, Git, REST APIs, TensorFlow, Scikit-learn

Projects

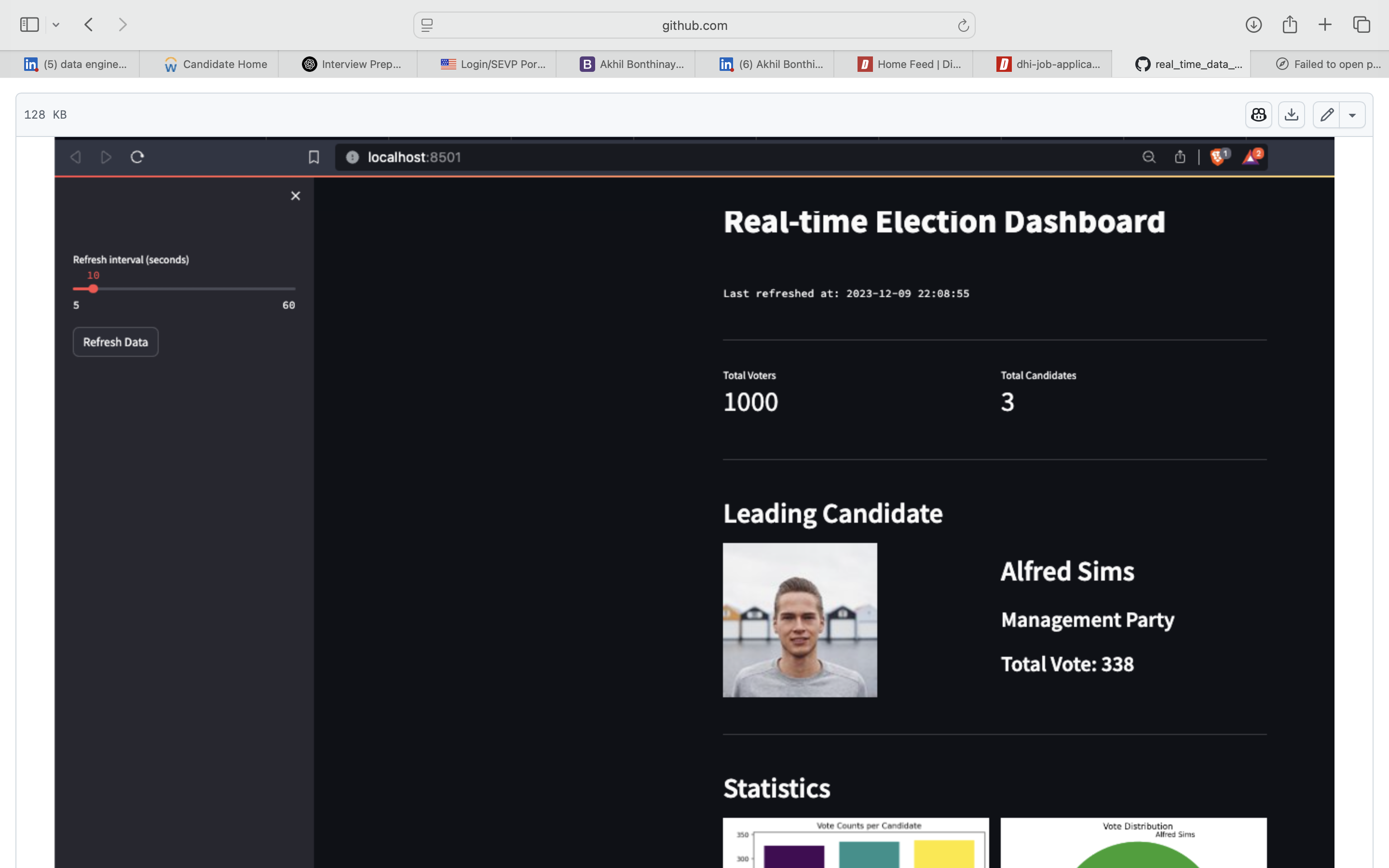

- Real time voting data engineering project - This repository contains the code for a real-time election voting system built with Python, Kafka, Spark Streaming, Postgres, and Streamlit. Docker Compose spins up the required services in Docker containers.

- RealtimeStreamingEngineering - Data Pipeline with Reddit, Airflow, Celery, Postgres, S3, AWS Glue, Athena, and Redshift

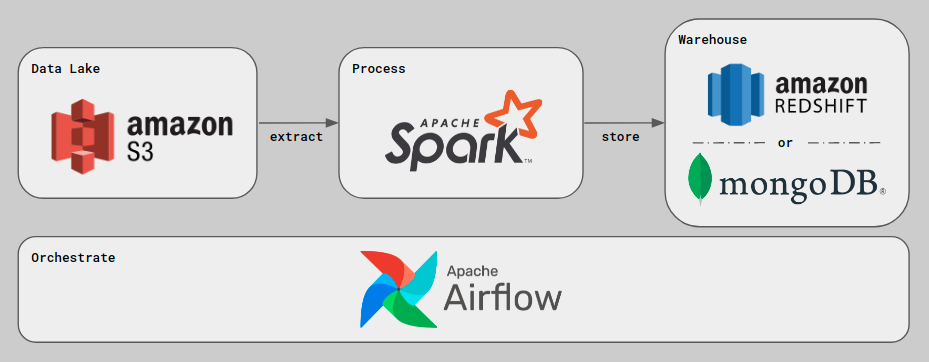

- Sparkify ETL Pipeline with Airflow and Redshift - This project builds a complete ETL pipeline to ingest Sparkify’s music data into an AWS Redshift data warehouse. It uses Apache Airflow for orchestration and scheduling. Data is extracted from AWS S3, transformed using custom Python scripts, and loaded into a star schema in Redshift to support analytical queries about user activity and song preferences.

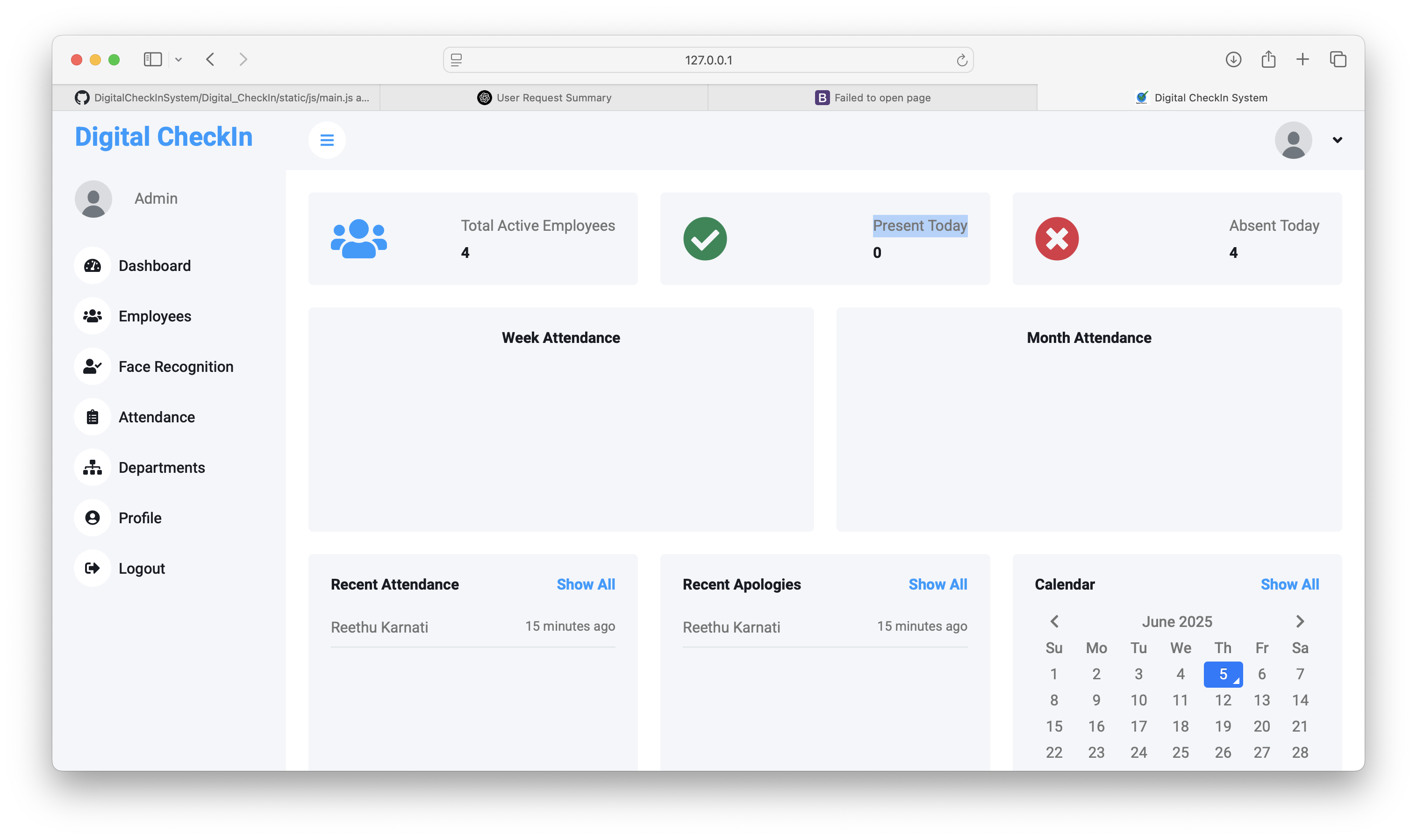

- Digital CheckIn System - A complete employee management and attendance system built with Django. The application allows employee registration, face recognition-based attendance marking, leave request management, department management, and admin dashboards for full control. Employees can update their profile, while admins can monitor attendance records, approve leave requests, and manage employee data efficiently.

- Full-Stack Django Personal Portfolio with AWS Deployment - Designed and developed a full-stack personal portfolio website using Django as backend and Bootstrap 5, AOS, and custom JavaScript libraries for interactive frontend. Implemented complete CRUD operations for dynamic project management using Django Admin interface. Integrated media upload functionality for project images and GitHub repository linking for each project entry. Enabled real-time category-based filtering of projects using Django ORM, slugify filters, and Isotope-style filtering logic. Configured secure contact form with CSRF protection, form validation, and Django form processing. Optimized static file handling using Django Whitenoise and Nginx for efficient production-ready deployment. Fully deployed on AWS EC2 instance with Gunicorn, Nginx, systemd, and PostgreSQL database integration. Managed source code and deployment lifecycle using Git, GitHub, and SSH remote synchronization.

- Django Tailwind Blog - Developer Portfolio Site - Created a complete developer portfolio website with Django backend and TailwindCSS frontend. Created dynamic blog, project showcase, categories, and contact form and implemented custom error handling. Built Django models, templates, and PostgreSQL database integrations. Deployed responsive web application with static/media file management.

Cover Letter

Date:

To:

Dear ,

I am excited to apply for the Data Engineer position at . With over one year of hands-on experience in building scalable data pipelines, transforming raw data into actionable insights, and managing cloud-based infrastructures, I am confident in my ability to contribute significantly to your team's success.

Throughout my internship at Accenture, I gained valuable experience in working with AWS, Azure, GCP, and big data technologies like Apache Spark. I was responsible for developing machine learning models, optimizing ETL pipelines, and designing cloud data architectures. Additionally, my knowledge of SQL, Python, and tools like Apache Airflow and dbt has allowed me to build robust data engineering solutions, which I believe would be an asset to your team.

Key Skills

- Extensive expertise in AWS, Azure, and GCP platforms

- Proven proficiency with Python, Apache Spark, and SQL

- Strong experience with data warehousing solutions such as Snowflake and BigQuery

- Experience with Django web development and PostgreSQL integration

Highlighted Projects

- Real time voting data engineering project

This repository contains the code for a real-time election voting system built with Python, Kafka, Spark Streaming, Postgres, and Streamlit. Docker Compose spins up the required services in Docker containers.

-

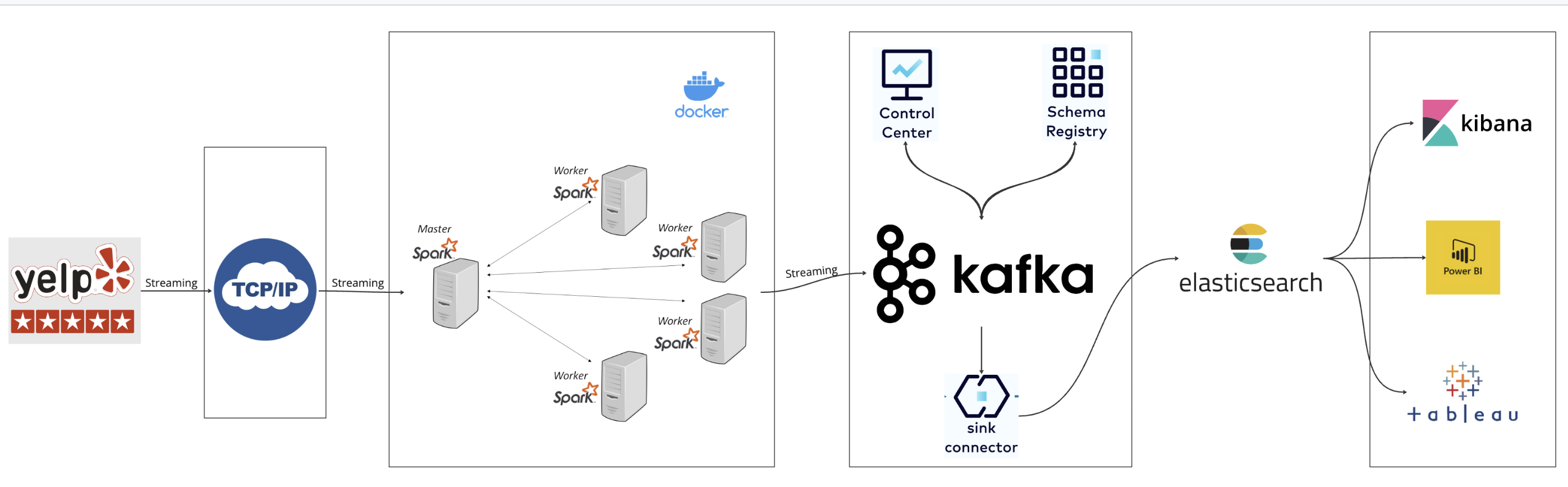

RealtimeStreamingEngineering

Data Pipeline with Reddit, Airflow, Celery, Postgres, S3, AWS Glue, Athena, and Redshift

- Sparkify ETL Pipeline with Airflow and Redshift

This project builds a complete ETL pipeline to ingest Sparkify’s music data into an AWS Redshift data warehouse. It uses Apache Airflow for orchestration and scheduling. Data is extracted from AWS S3, transformed using custom Python scripts, and loaded into a star schema in Redshift to support analytical queries about user activity and song preferences.

- Digital CheckIn System

A complete employee management and attendance system built with Django. The application allows employee registration, face recognition-based attendance marking, leave request management, department management, and admin dashboards for full control. Employees can update their profile, while admins can monitor attendance records, approve leave requests, and manage employee data efficiently.

- Full-Stack Django Personal Portfolio with AWS Deployment

Designed and developed a full-stack personal portfolio website using Django as backend and Bootstrap 5, AOS, and custom JavaScript libraries for interactive frontend. Implemented complete CRUD operations for dynamic project management using Django Admin interface. Integrated media upload functionality for project images and GitHub repository linking for each project entry. Enabled real-time category-based filtering of projects using Django ORM, slugify filters, and Isotope-style filtering logic. Configured secure contact form with CSRF protection, form validation, and Django form processing. Optimized static file handling using Django Whitenoise and Nginx for efficient production-ready deployment. Fully deployed on AWS EC2 instance with Gunicorn, Nginx, systemd, and PostgreSQL database integration. Managed source code and deployment lifecycle using Git, GitHub, and SSH remote synchronization.

- Django Tailwind Blog - Developer Portfolio Site

Created a complete developer portfolio website with Django backend and TailwindCSS frontend. Created dynamic blog, project showcase, categories, and contact form and implemented custom error handling. Built Django models, templates, and PostgreSQL database integrations. Deployed responsive web application with static/media file management.

Thank you for considering my application. I look forward to the possibility of discussing how my skills and background can contribute to your team's success.

Sincerely,

Akhil Bonthinayanivari

Projects

Explore some of the key projects I've worked on, showcasing my expertise in various technologies.

Real time voting data enginee…

Python, JavaScript, Data Engineering

This repository contains the code for a real-time election voting system built with Python, Kafka, Spark Streaming, Postgres, and Streamlit. Docker Compose spins up the required services in Docker containers.

RealtimeStreamingEngineering

Python, Data Engineering

Data Pipeline with Reddit, Airflow, Celery, Postgres, S3, AWS Glue, Athena, and Redshift

Sparkify ETL Pipeline with Ai…

Python, Data Engineering

This project builds a complete ETL pipeline to ingest Sparkify’s music data into an AWS Redshift data warehouse. It uses Apache Airflow for orchestration and scheduling. Data is extracted from AWS S3, transformed using custom Python scripts, and loaded into a star schema in Redshift to support analytical queries about user activity and song preferences.
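As a rough illustration of the transform step described above, the sketch below splits flat listening events into one fact table and two dimension tables, which is the general shape of a star schema. It is a minimal, in-memory example and not the project's actual code: the function name `to_star_schema`, the table names, and every field name (`user_id`, `level`, `song_id`, `title`, `ts`) are assumptions made for illustration, and the S3 extract and Redshift load steps are left out.

```python
from typing import Iterable


def to_star_schema(events: Iterable[dict]) -> dict:
    """Split flat listening events into a fact table and two dimension tables.

    All field and table names here are hypothetical, chosen only to
    illustrate the star-schema idea.
    """
    users: dict = {}
    songs: dict = {}
    songplays: list = []

    for event in events:
        # Dimension rows are keyed by their id, so repeated users or songs
        # collapse into a single row (the latest event wins).
        users[event["user_id"]] = {
            "user_id": event["user_id"],
            "level": event["level"],
        }
        songs[event["song_id"]] = {
            "song_id": event["song_id"],
            "title": event["title"],
        }
        # The fact row keeps only the foreign keys and the event timestamp.
        songplays.append(
            {
                "user_id": event["user_id"],
                "song_id": event["song_id"],
                "ts": event["ts"],
            }
        )

    return {
        "songplays": songplays,
        "users": list(users.values()),
        "songs": list(songs.values()),
    }


# Hypothetical sample data: three plays by two users across two songs.
sample_events = [
    {"user_id": 1, "level": "free", "song_id": "S1", "title": "Song A", "ts": 1000},
    {"user_id": 2, "level": "paid", "song_id": "S1", "title": "Song A", "ts": 1005},
    {"user_id": 1, "level": "paid", "song_id": "S2", "title": "Song B", "ts": 1010},
]

tables = to_star_schema(sample_events)
```

Keeping the fact rows narrow and pushing descriptive attributes into the dimension tables is what lets analytical queries about user activity and song preferences join on small keys instead of scanning wide event records.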

Digital CheckIn System

Python, Django, JavaScript, Web Development, Machine Learning

A complete employee management and attendance system built with Django. The application allows employee registration, face recognition-based attendance marking, leave request management, department management, and admin dashboards for full control. Employees can update their profile, while admins can monitor attendance records, approve leave requests, and manage employee data efficiently.

Full-Stack Django Personal Po…

Python, Django, JavaScript, Web Development

Designed and developed a full-stack personal portfolio website using Django as backend and Bootstrap 5, AOS, and custom JavaScript libraries for interactive frontend. Implemented complete CRUD operations for dynamic project management using Django Admin interface. Integrated media upload functionality for project images and GitHub repository linking for each project entry. Enabled real-time category-based filtering of projects using Django ORM, slugify filters, and Isotope-style filtering logic. Configured secure contact form with CSRF protection, form validation, and Django form processing. Optimized static file handling using Django Whitenoise and Nginx for efficient production-ready deployment. Fully deployed on AWS EC2 instance with Gunicorn, Nginx, systemd, and PostgreSQL database integration. Managed source code and deployment lifecycle using Git, GitHub, and SSH remote synchronization.

Django Tailwind Blog - Develo…

Python, Django, JavaScript, Web Development

Created a complete developer portfolio website with Django backend and TailwindCSS frontend. Created dynamic blog, project showcase, categories, and contact form and implemented custom error handling. Built Django models, templates, and PostgreSQL database integrations. Deployed responsive web application with static/media file management.

Contact

I am always open to new opportunities, collaborations, and challenging projects. If you would like to connect, please feel free to reach out via any of the contact options below.